In just the last year, artificial intelligence and robotics have advanced by leaps and bounds, and the distinction between man and machine has begun to blur in troubling ways. Though proponents of these technologies emphasize how robots and artificial intelligence will make life more convenient and comfortable, there is a darker side to all of this: among other issues, robots are set to take over a majority of the world’s jobs within a matter of decades. The technology may have grown exponentially in recent years, yet the development of a corresponding ethical framework has lagged far behind. There is no clearer example of this divide than a new study from Shanghai Jiao Tong University in China, whose authors claim that artificial intelligence is fully capable of determining whether or not a person will be a criminal based solely on their facial features.

In a paper titled “Automated Inference on Criminality using Face Images,” two researchers from the university used computer software and “supervised machine learning” to comb through the “facial images of 1,856 real persons.” They reported finding “some discriminating structural features for predicting criminality, such as lip curvature, eye inner corner distance, and the so-called nose-mouth angle.” Nearly half of the images were of convicted criminals, though the study does not mention the types of offenses they committed. Ultimately, the researchers concluded that their classifiers “perform consistently well and produce evidence for the validity of automated face-induced inference on criminality, despite the historical controversy surrounding the topic.”

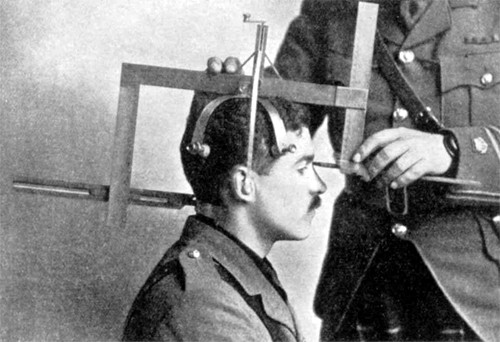

The authors are right to mention the “historical controversy” surrounding their claims: for hundreds of years, eugenicists and others looking to justify the superiority of particular racial groups have tried to correlate physiology with moral and intellectual qualities. The most popular of these attempts was phrenology, which claimed to determine a person’s character and intelligence from the shape of their skull. Phrenology had lost popularity and credibility by the 1840s, with only those on the fringes of science or those desperate to “prove” their race’s superiority still arguing its merits. Nevertheless, governments such as Nazi Germany and the Belgian colonial administration in Rwanda made use of phrenology in the 20th century, with ghastly consequences, namely genocide. In an unexpected turn of events, the rise of artificial intelligence seems to have resurrected these same principles.

In the study, the authors argue that using computers to correlate human physiology with likely behavior avoids the racism that tainted past attempts, since computers have no capacity for racism. The study states: “Unlike a human examiner/judge, a computer vision algorithm or classifier has absolutely no subjective baggages, having no emotions, no biases whatsoever due to past experience, race, religion, political doctrine, gender, age, etc., no mental fatigue, no preconditioning of a bad sleep or meal. The automated inference on criminality eliminates the variable of meta-accuracy (the competence of the human judge/examiner) all together.” However, the authors seem to neglect the fact that every algorithm is designed by humans and, in supervised learning, trained on data that humans select and label, meaning the potential for human bias is still very much present.

Another problem lies in the racial and ethnic make-up of a nation’s prison population. In the United States, for example, a majority of prisoners are Black or Latino due to the institutional racism of the American justice system. Any “self-learning” artificial intelligence program trained on such data would learn to associate the facial features of these groups with criminality, making the division between criminal and non-criminal no different from a division along ethnic or racial lines.

Also of concern is the potential for abuse of this technology by law enforcement or governments. If other scientists decide to throw their support behind this discredited premise, the resulting “scientific consensus” could be used to justify its adoption by police states and militarized police forces looking to expand their practice of “predictive policing.” The US already has massive biometric databases containing the fingerprints, facial images, and DNA of millions of Americans. It would be a small step to add a new algorithm classifying everyday Americans as potential criminals or non-criminals.


This article (Scientists Claim AI Can Predict Criminal Behavior Based on a Person’s Facial Features) is free and open source. You have permission to republish this article under a Creative Commons license with attribution to the author and TrueActivist.com.